The autonomous car of the future.

Many Americans are leery of self-driving vehicles because putting our lives, and the lives of our loved ones, in the hands of artificial intelligence is too big a leap right now. In fact, three out of four Americans are afraid of self-driving vehicles, according to a recent AAA study.

It’s not that human-controlled driving today is terribly unsafe. There are 1.49 deaths per 100 million miles driven, according to the National Safety Council (NSC). Still, the Association for Safe International Road Travel reported that an average of more than 38,000 people die every year in crashes on U.S. roadways.

Many believe that for autonomous vehicles to be accepted into the mainstream, they must be far safer than human-controlled vehicles. In fact, they should strive to be safer than air travel, which is remarkably safe. In all of 2018, there were 393 civil aviation deaths. However, only one of these deaths involved a commercial airline, according to the National Safety Council.

The reason autonomous driving must be substantially safer is twofold: Many people are already afraid of self-driving vehicles, and when there is an accident, the news coverage disproportionately shapes our perception of the risk.

The story of the self-driving Uber that caused a pedestrian fatality has nearly 25,000 mentions on Google, and people have long memories.

That’s why autonomous driving must adopt a “zero autonomous driving accidents vision” to achieve mainstream acceptance for Level 4 or Level 5 autonomous capabilities.

5 levels of autonomous driving

- Level 1: One aspect is automated

- Level 2: Two or more elements are automatic

- Level 3: Car is controlling safety-critical functions

- Level 4: Fully autonomous in controlled areas

- Level 5: Car is fully autonomous

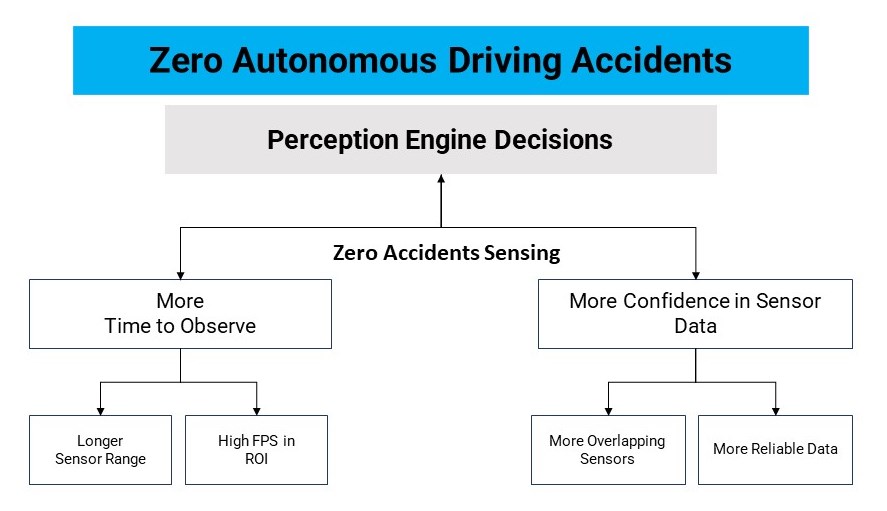

How do we get to zero autonomous driving accidents? Countless teams across the globe have been studying this issue and, more specifically, what kind of sensors are needed to give a perception engine sufficient reaction time, generally considered to be seven-plus seconds, to safely navigate complex scenarios and avoid all accidents.

The bottom line is the perception engine needs more time to observe and needs to have more confidence in the sensor data.

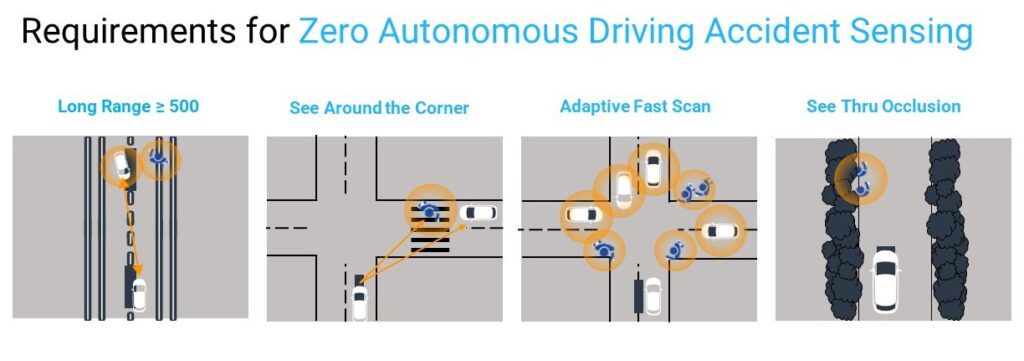

In analyzing accidents where fatalities occur, there are four areas where car sensors must improve radically — under all weather conditions — to reach zero autonomous driving accidents:

- High Speed: Vehicles moving at very high speed in opposite directions on a collision trajectory.

- Around Corners: Detecting pedestrians, especially children, around corners or occluded by other objects or vehicles.

- Fast Scanning: Tracking hundreds of targets at a very high frame rate.

- Blocked View: Detecting vehicles and pedestrians in a rural area with significant vegetation growth occluding line-of-sight sensors like LiDARs and cameras.

Finally, it’s critical that the sensor continually and adaptively interrogate and dwell on regions of interest (RoI) at high frame rates, simultaneously and synchronously with the other sensors, to capture subtle movements.

Certainly, companies are diligently working on these issues. Three sensor types are primarily being used, and each has substantial merits and limitations:

- Cameras: They provide high-resolution images, but they lack native depth information and depend on light conditions.

- Radar: It measures velocity with great precision, but it has trouble detecting static objects and measuring the position of objects due to low resolution.

- LiDAR: It’s the only sensor to provide native 3D data, but high-performance LiDAR solutions can perform poorly in adverse weather conditions such as heavy rain, fog or snow.

There are proponents and detractors for each. Elon Musk famously said: “LiDAR is a fool’s errand. Anyone relying on LiDAR is doomed.” He went on to explain that he believes LiDAR sensors are expensive and unnecessary.

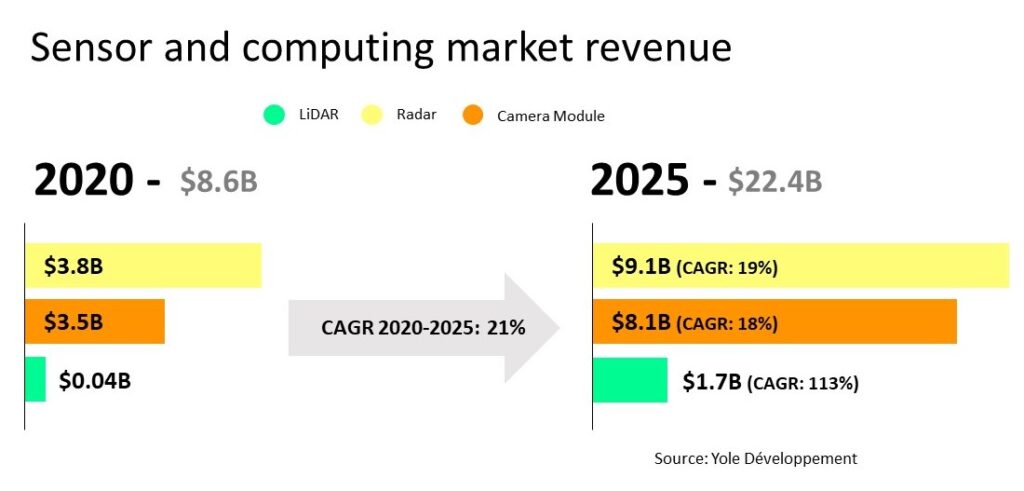

Most agree there is a place for all these technologies, and analysts forecast that each will enjoy a boom in the coming years. For instance, the market for radar and LiDAR will more than double by 2025 to over $25 billion, according to the analyst group Yole Développement.

To reach zero autonomous driving accidents, the Neural Propulsion Systems team argues that both LiDAR and radar must undergo radical improvements, and that LiDAR, radar and cameras must then be fused into a single system.

LiDAR and radar innovation must occur to address the two most formidable roadblocks to reaching Level 5 autonomous driving: distance and corners.

Most LiDAR systems have a range of only 250 meters, and they must achieve 500 meters to give the perception engine the seven-plus seconds it needs to make the correct judgment call. Also, improved radar can enable cars to know what’s around a corner, which is immensely important to preventing accidents. Working with a team of thought leaders in radar and LiDAR, we have overcome both these challenges.
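To see why range translates directly into reaction time, here is a minimal back-of-the-envelope sketch. It assumes a head-on scenario with two vehicles each traveling 80 mph; that speed is an illustrative assumption, not a figure from the studies cited here, and the function names are hypothetical.

```python
MPH_TO_MPS = 0.44704  # exact conversion: 1 mph = 0.44704 m/s


def time_to_contact_s(range_m: float, closing_speed_mps: float) -> float:
    """Seconds until two objects meet, given detection range and closing speed."""
    if closing_speed_mps <= 0:
        raise ValueError("closing speed must be positive")
    return range_m / closing_speed_mps


def required_range_m(closing_speed_mps: float, reaction_time_s: float = 7.0) -> float:
    """Detection range needed to leave the perception engine reaction_time_s."""
    return closing_speed_mps * reaction_time_s


# Assumption (not from the article): two vehicles approaching head-on at 80 mph each.
closing_speed_mps = 2 * 80 * MPH_TO_MPS

for sensor_range_m in (250, 500):
    t = time_to_contact_s(sensor_range_m, closing_speed_mps)
    print(f"{sensor_range_m} m of range -> {t:.1f} s to react")
```

Under that assumed closing speed, 250 meters of range leaves roughly 3.5 seconds, while 500 meters leaves roughly 7 seconds. Slower scenarios are more forgiving, but the high-speed head-on case is the one that sets the requirement.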

With these issues addressed, LiDAR, radar and cameras can be combined into an AI-powered deep sensor-fusion system that leverages the notable capabilities of each technology while overcoming its weaknesses, an approach that would get us to near-zero autonomous driving accidents.

Ultimately, for these autonomous vehicle sensors to gain mainstream adoption, it’s paramount that they achieve and maintain an impeccable safety record. Secondarily, they must be reliable and easy to repair. Lastly, as implementations ramp up, they will become more affordable and smaller, which can facilitate even greater proliferation.
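The intuition behind fusion can be illustrated with a far simpler technique than a deep-learning system: classic inverse-variance weighting, in which each sensor's estimate counts in proportion to how much it can be trusted. The sketch below is only a stand-in for that idea, not the Neural Propulsion Systems approach. It assumes independent, roughly Gaussian errors, and the class name, function name and sample numbers are all hypothetical.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class RangeEstimate:
    sensor: str
    range_m: float  # estimated distance to the target, in meters
    std_m: float    # assumed 1-sigma uncertainty of that estimate, in meters


def fuse_ranges(estimates: list[RangeEstimate]) -> tuple[float, float]:
    """Inverse-variance weighted average of independent range estimates.

    Returns (fused_range_m, fused_std_m). More certain sensors get more weight.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / (e.std_m ** 2) for e in estimates]
    total_weight = sum(weights)
    fused_range = sum(w * e.range_m for w, e in zip(weights, estimates)) / total_weight
    fused_std = sqrt(1.0 / total_weight)
    return fused_range, fused_std


# Hypothetical readings for one target; the values are made up for illustration.
readings = [
    RangeEstimate("camera", 102.0, 5.0),  # no native depth, so a loose estimate
    RangeEstimate("radar", 100.0, 2.0),
    RangeEstimate("lidar", 99.5, 0.5),    # native 3D data, so a tight estimate
]
fused_range_m, fused_std_m = fuse_ranges(readings)
print(f"fused range: {fused_range_m:.2f} m (+/- {fused_std_m:.2f} m)")
```

The fused uncertainty is always smaller than that of the best single sensor, which is the basic case for combining all three. A production system would also have to handle time alignment, correlated errors and sensors that drop out in bad weather, none of which this sketch attempts.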

For most Americans, self-driving cars will have to be far safer than their human-steered counterparts before they will let go of the wheel. Fortunately for all of us, technology is advancing—while remaining affordable—to get us to zero autonomous driving accidents.

About Dr. Behrooz Rezvani

Behrooz Rezvani is a serial entrepreneur and currently founder and CEO of Neural Propulsion Systems, Inc., a pioneer in autonomous driving and sensing platforms.

Previously he was founder and CEO of Seyyer, focused on artificial intelligence and machine learning technology. He co-founded Quantenna, an industry-leading Wi-Fi technology company that had a very successful IPO and was purchased by ON Semiconductor for $1B in 2019.

He was the founder of Ikanos Communications, a leader in DSL modem/infrastructure ICs and home gateways, which had a successful IPO and was later purchased by Qualcomm.
