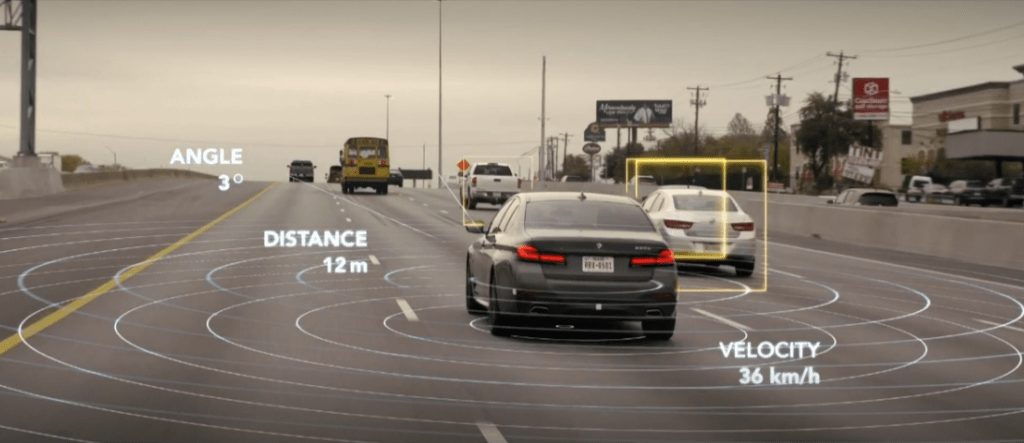

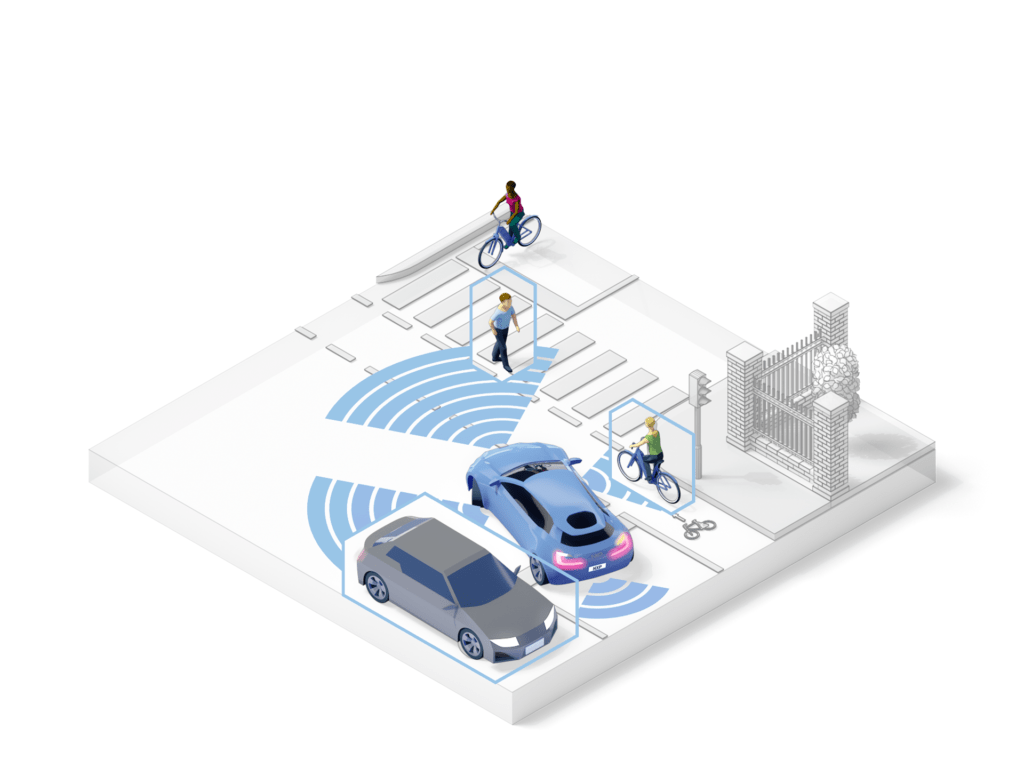

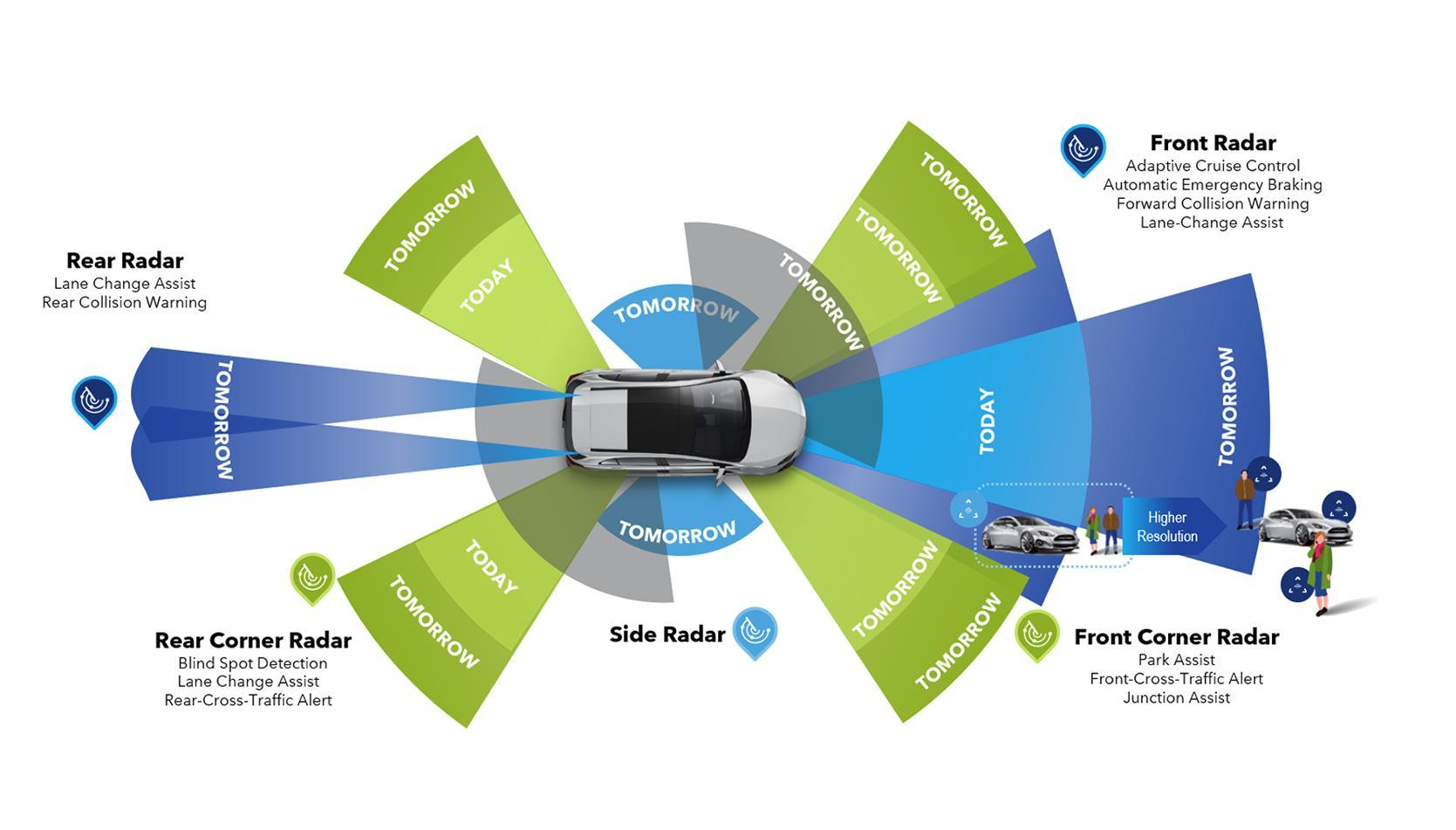

Multiple radar sensors create a 360-degree safety cocoon.

A new generation of radar sensors and software is enabling radar to deliver the high-quality data needed for assisted driving.

Automotive Industries (AI) asked Matthias Feulner, Senior Director ADAS at NXP Semiconductors, how the company strikes the optimal balance between requirements for increased sensor capabilities, smaller form factors and power efficiency for the next generation of radar solutions.

Feulner: Use cases for automotive radar have evolved into three distinct segments: corner radar sensors, long-range front radar sensors, and high-resolution imaging radar sensors. Each has unique requirements, so the hardware has to be tailored to the individual use case. We are addressing this by offering a scalable family of radar chipsets.

The new SAF 85xx is a family of single-chip radar devices implemented in 28 nanometer (nm) RF CMOS. This is the first 28 nm RF CMOS single-chip radar on the market; other single-chip devices are commonly built in 40 or 45 nm technology.

What this gives us is RF performance twice as good as the previous generation's. So we can see farther down the road and use this single chip for long-range front radar. At the same time, we can detect smaller objects and separate them from one another, or separate small objects from large ones, such as a pedestrian from a parked car, or a bicycle or motorcycle rider from a truck.

Another advantage is a significant reduction in size. That is important because tomorrow’s vehicles will have many more radar sensors for so-called 360-degree radar safety cocoons. At the same time, car OEMs want the sensors to blend in, which means fitting them into constrained spaces. The sensors also need to use less power to reduce the load on the battery, which is particularly important for electric vehicles.

AI: What are other advantages?

Feulner: Traditionally, radars have operated in a 3D mode, measuring range, velocity and azimuth. We are adding a fourth dimension: elevation. The 28 nanometer single chip is our sixth-generation automotive radar and our third generation of RF CMOS radar technology. This third generation gives us twice the RF performance of the previous one.

We also get a 40% increase in compute performance over previous-generation radar MCUs.

By increasing the transmitter output power and reducing receiver noise, we can now look even farther and detect smaller objects. Consequently, we expect to achieve a range of 300 to 400 meters. We also reduced phase noise, which is important because a high noise level can easily mask smaller objects, such as vulnerable road users (VRUs), which reflect only a small part of the radar signal.
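The link between transmit power, receiver noise and detection range follows from the standard radar range equation, in which the received echo power falls off with the fourth power of range. The sketch below is a generic textbook calculation, not NXP's figures. It shows how much the maximum range and the smallest detectable target change for a given improvement in the ratio of transmit power to receiver noise. The function names are hypothetical, and the 3 dB value in the example is an assumption chosen for illustration, not a number from the interview.

```python
# Radar range equation (monostatic), solved for maximum range:
#   R_max = [P_t * G^2 * lambda^2 * sigma / ((4*pi)^3 * k * T0 * B * F * SNR_min)] ** (1/4)
# With everything else held fixed, R_max scales with (P_t / noise) ** (1/4),
# and the smallest detectable cross-section sigma at a fixed range scales with noise / P_t.

def range_scale(snr_gain_db: float) -> float:
    """Factor by which maximum detection range grows for a gain in P_t/noise (in dB)."""
    return 10 ** (snr_gain_db / 40.0)

def min_rcs_scale(snr_gain_db: float) -> float:
    """Factor by which the smallest detectable radar cross-section shrinks at a fixed range."""
    return 10 ** (-snr_gain_db / 10.0)

# Hypothetical example: a 3 dB improvement, roughly a doubling of P_t/noise.
print(f"range x{range_scale(3.0):.3f}")      # about 1.19 times the range
print(f"min RCS x{min_rcs_scale(3.0):.3f}")  # about half the cross-section
```

Because of the fourth-root dependence, doubling the power-to-noise ratio extends range by only about 19%, while the same gain roughly halves the smallest cross-section detectable at a given range.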

Another improvement is the ability to make use of multiple input, multiple output (MIMO) waveforms.

There are four transmit antennas on the chip, each of which can be assigned a different task, and the tasks are carried out simultaneously. Three antennas can be used to sense in the horizontal plane, with the fourth sensing the vertical dimension. Alternatively, you can dedicate some of the antennas to short-range sensing in the immediate environment of the car while using the others for longer-range sensing.
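In a MIMO radar, each transmit/receive pair behaves like one element of a larger "virtual" array located at the sum of the two antenna positions. This works provided the receiver can tell the transmitters' signals apart, for example because they transmit in turn (time-division MIMO). The sketch below illustrates how three horizontally spaced transmitters and one vertically offset transmitter yield both azimuth and elevation information. The antenna layout, the four receive channels and the half-wavelength grid are all hypothetical assumptions, not the actual SAF 85xx configuration.

```python
from itertools import product

# Hypothetical layout on a grid of half-wavelength (lambda/2) steps; not NXP's design.
RX = [(x, 0) for x in range(4)]          # assumed: 4 receive antennas in a row
TX = [(0, 0), (4, 0), (8, 0), (0, 1)]    # 3 azimuth transmitters + 1 raised for elevation

def virtual_array(tx, rx):
    """Each Tx/Rx pair acts as one virtual element at the sum of their positions."""
    return [(tx_x + rx_x, tx_y + rx_y) for (tx_x, tx_y), (rx_x, rx_y) in product(tx, rx)]

va = virtual_array(TX, RX)
azimuth_row = sorted(x for x, y in va if y == 0)
elevation_row = sorted(x for x, y in va if y == 1)
print(len(va))        # 16 virtual channels from 4 Tx x 4 Rx
print(azimuth_row)    # 12 positions, 0 through 11: a gap-free horizontal aperture
print(elevation_row)  # vertically offset elements that carry elevation information
```

Spacing the azimuth transmitters by the width of the receive row is one common way to obtain a gap-free virtual aperture three times wider than the physical receive array.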

AI: What critical safety applications will benefit from the one-chip solution?

Feulner: Most of the vehicles on the road in 2022 are equipped with three radar sensors. One in the front is used for applications such as automated emergency braking, forward collision warning, and adaptive cruise control. Two rear corner sensors are commonly used for blind spot detection. Come 2025, we expect the average mid-range vehicle to have five radar sensors. Premium vehicles will have 10 or more.

They will be used for functions such as lane change assist, cross traffic alert, and park assist.

Previously, sensors were fitted primarily to meet safety regulations and ratings such as NCAP and NHTSA requirements.

Going forward, OEMs are looking to introduce more comfort features they can monetize, selling services and upgrades to generate a continuous revenue stream after the vehicle has been delivered. So we see a lot more sensors being fitted from 2025, regardless of whether the buyer wants the feature at the time of purchase. If the vehicle is already fully equipped with the necessary hardware, the features can later be sold as upgrades or subscriptions.

AI: Are the platform and advanced radar software scalable?

Feulner: The platform is scalable in multiple ways. Our approach to automotive radar is to use common IP both on the processing side and on the millimeter-wave side. That lets us tailor each device to the individual use case while allowing customers maximum reuse and reduced R&D investment, because the IP is common.

On the software side, we have invested significantly in developing in-house software that makes optimal use of the silicon IP. To that end, we have developed advanced radar algorithms that help with challenges such as interference mitigation. According to market research estimates, hundreds of millions of automotive radar sensors will be fitted to the next generations of vehicles, increasing the chance that another vehicle's radar interferes with, or even blinds, the sensor on your own. Consequently, reliable interference detection and mitigation is becoming increasingly important.
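A common baseline for interference mitigation in FMCW radar is time-domain excision. When another radar's chirp sweeps through the receiver's band, it shows up as a short, high-amplitude burst in the sampled beat signal, and those samples can be detected and zeroed before the range FFT. The function below is a minimal, generic sketch of that idea using a median-based threshold. The function name and the threshold factor `k` are illustrative assumptions, and this is not a description of NXP's algorithms.

```python
import math
import statistics

def mitigate_interference(samples, k=5.0):
    """Zero samples whose magnitude exceeds k times the chirp's median magnitude.

    samples: beat-signal (IF) samples of one chirp, real or complex.
    Returns (cleaned_samples, flagged_indices).
    """
    magnitudes = [abs(s) for s in samples]
    threshold = k * statistics.median(magnitudes)
    flagged = [i for i, m in enumerate(magnitudes) if m > threshold]
    cleaned = list(samples)
    for i in flagged:
        cleaned[i] = 0.0  # excise the suspected interference burst
    return cleaned, flagged

# Simulated chirp: one target's beat tone plus a burst from another radar.
chirp = [math.sin(2 * math.pi * 0.05 * n) for n in range(64)]
for n in range(30, 34):
    chirp[n] += 20.0
cleaned, flagged = mitigate_interference(chirp)
print(flagged)  # [30, 31, 32, 33]
```

Zeroing removes the burst at the cost of a small loss of signal energy; more refined approaches exist, for example reconstructing the excised samples instead of leaving a gap.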

We can apply software upgrades to improve the sensor's ability to deal with interference.

We have also developed specific algorithms for high-resolution processing, which enhance resolution through additional post-processing on top of the hardware design. In radar, sensor resolution scales with the number of antennas. The more antennas you have, the better the resolution of the sensor, but at some point adding more is no longer economical. Consequently, we have invested in radar algorithms that enhance sensor resolution by means of digital post-processing and that could be offered as part of a feature such as park assist.
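The statement that resolution scales with the number of antennas can be made concrete with the usual rule of thumb for a uniformly weighted linear array. Near broadside, two targets at the same range and speed can be separated in angle if they are at least about λ/(N·d) radians apart, where N is the number of (virtual) elements and d is their spacing. The sketch below evaluates that approximation; the function name and element counts are hypothetical, and super-resolution post-processing aims to do better than this limit without adding hardware.

```python
import math

def angular_resolution_deg(n_elements: int, spacing_wavelengths: float = 0.5) -> float:
    """Approximate broadside angular resolution of a uniform linear array.

    Uses the Rayleigh-style criterion theta ~ lambda / (N * d), with the
    spacing d expressed in wavelengths, and returns the result in degrees.
    """
    aperture_wavelengths = n_elements * spacing_wavelengths
    return math.degrees(1.0 / aperture_wavelengths)

# Hypothetical virtual-array sizes at half-wavelength spacing.
for n in (12, 48, 192):
    print(n, f"{angular_resolution_deg(n):.2f} deg")
```

Each fourfold increase in elements narrows the resolvable angle fourfold, which illustrates why adding antennas eventually stops being economical.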

AI: Is it future proof?

Feulner: Today most radar systems use edge processing, which means most of the processing happens at the sensor. Data, usually detections or objects, is transmitted over a low-speed interface such as a CAN bus or 100-Megabit Ethernet to the ADAS main controller. From 2026 onwards we believe there will be a bifurcation, with vehicle architectures emerging in which only part of the processing is done at the sensor. Lower-level data will be transferred over a higher-speed interface to a zonal or centralized controller, where post-processing will be done.

It will be a relatively slow transition, though. We predict that in 2030, 70% of vehicles shipped, in particular those in the more cost-sensitive volume segment, will continue to use edge processing. Around 30%, starting with premium vehicles, will have some form of centralized or zonal processing. We are preparing to support both architectures with seamless transition options, because OEMs have different transition paths depending on their brand and model portfolio.

So our scalable platform will support both edge-processing sensors and sensors whose processing is distributed between the sensor and a central controller.

Because we provide both the radar front end and high-performance radar processors, we think we are well placed to work with OEMs on this transition and to find the optimum partitioning. The goal is improved sensor data fusion while keeping costs in check: lower-level sensor data requires higher-speed connectivity, which can carry a cost premium, so transferring raw sensor data would not be practical.

The right balance needs to be struck and the sweet spot needs to be found.
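The cost argument against sending raw sensor data to a central controller largely comes down to link bandwidth. The arithmetic below compares an assumed raw ADC stream with the 100-Megabit Ethernet link used for today's edge-processed sensors. The function name, channel count, sample rate, sample width and chirp duty cycle are all hypothetical values chosen for illustration, not specifications of any NXP device.

```python
def raw_adc_rate_bps(n_rx: int, sample_rate_hz: float, bits_per_sample: int,
                     complex_samples: bool = False, duty_cycle: float = 1.0) -> float:
    """Average raw ADC data rate of a radar front end, in bits per second.

    duty_cycle is the fraction of time the receivers are actually sampling
    (for example, while chirps are active).
    """
    values_per_sample = 2 if complex_samples else 1  # I and Q for complex sampling
    return n_rx * sample_rate_hz * bits_per_sample * values_per_sample * duty_cycle

ETHERNET_100M_BPS = 100e6

# Hypothetical front end: 4 receivers, 20 MS/s, 16-bit real samples, 50% duty cycle.
rate = raw_adc_rate_bps(4, 20e6, 16, duty_cycle=0.5)
print(f"{rate / 1e6:.0f} Mbit/s, {rate / ETHERNET_100M_BPS:.1f}x a 100 Mbit/s link")
```

Even with these modest assumptions, the raw stream is several times what a 100 Mbit/s link can carry, which is why the partitioning between sensor and controller matters for cost.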

AI: What’s next for NXP?

Feulner: We are getting to the point where multiple radar sensors in a vehicle act as a 360-degree safety cocoon, which allows us to detect, separate and classify objects very accurately. This is made possible by the level of detail modern sensors can capture. Artificial intelligence and machine learning may further improve the interpretation of the combined data that multiple radar sensors deliver, enabling radar-based perception and extending the possible uses for future safety and comfort features.

More Stories

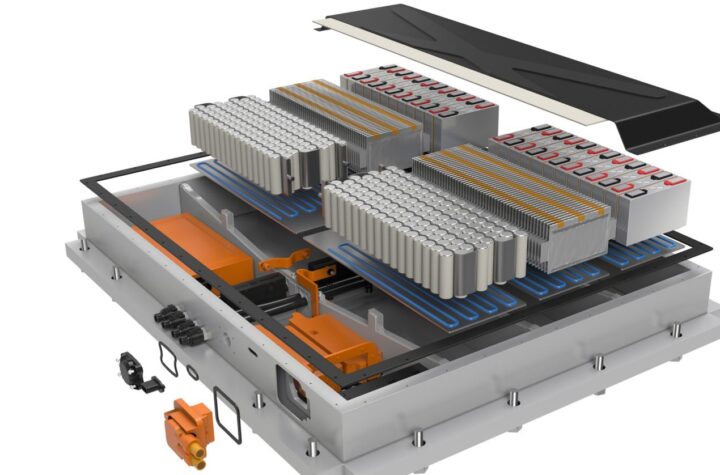

DuPont materials science advances next generation of EV batteries at The Battery Show

How a Truck Driver Can Avoid Mistakes That Lead to Truck Accidents

Car Crash Types Explained: From Rear-End to Head-On Collisions